The UK’s Online Safety Act 2023 and similar legislation in other jurisdictions are often presented as measures to protect children from harmful content. However, an examination of their provisions shows they also introduce broad new regulatory powers over online platforms, advertising environments and content moderation practices. Critics argue that these measures primarily serve to strengthen government oversight and protect commercial brand interests, with child safety as a secondary effect.

Imagine a world...

…where multi-billion-dollar companies lose money due to controversies surrounding their advertising. Now, what if their goals aligned with those of authoritarian governments vying for more control over speech and surveillance? Wouldn't it be useful if there were an overabundance of NGOs you could fund to achieve your goal?

What might be the outcome of such a world? Welcome to 2025, where we have the Online Safety Act, the Digital Services Act and Chat Control.

This might all sound a bit far-fetched. When I first found out about the World Federation of Advertisers (WFA) and its now-retired Global Alliance for Responsible Media (GARM) initiative, believe me, I thought I should be breaking out the tin foil hat. That was until I found a report published this year by the US House Judiciary Committee dubbing them

an "Advertising Cartel" and uncovering evidence "that some of the world’s largest companies colluded through GARM to control online content and censor disfavored speech."

So what is GARM exactly? In their own words:

The Global Alliance for Responsible Media (GARM) was a voluntary cross-industry initiative created in 2019 to address digital safety. GARM was set up in the wake of the Christchurch New Zealand Mosque shootings during which the killer livestreamed the attack on Facebook. This followed a slew of high-profile cases where brands’ ads appeared next to illegal or harmful content, such as promoting terrorism or child pornography – creating both consumer and reputational issues for brands.

Influence

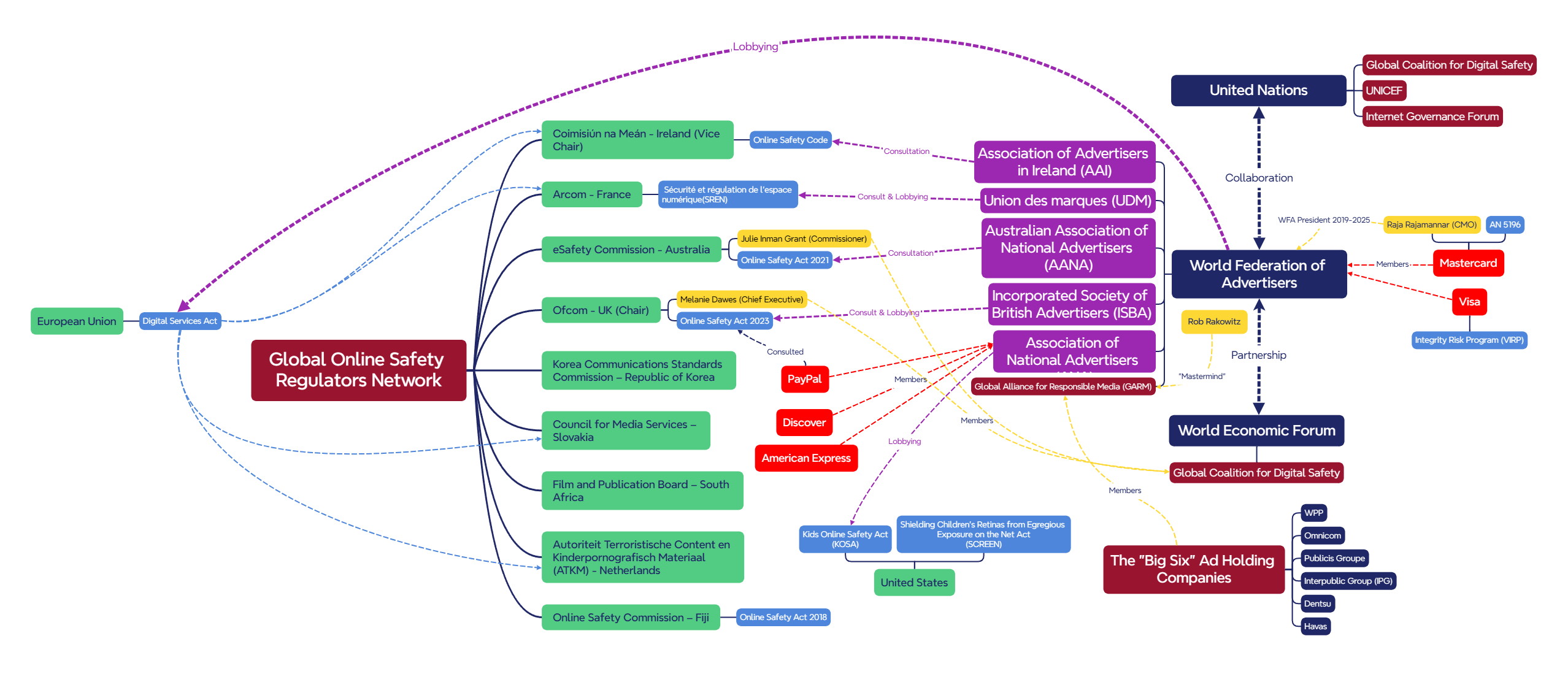

Through its global brands and national associations, the WFA's membership accounts for roughly 90% of global annual advertising spend (close to $1 trillion). With resources on this scale, its influence is vast, arguably without limit. Previously, advertisers applied social and financial pressure to platforms, for example by pulling their advertisements; now, laws are being passed to bring social media platforms and user-to-user content into line.

In the image above I've tried to map out the scale of these companies' influence on governments and their safety regulators. I have predominantly focused on payment processors because of their ability to financially de-platform platforms, but keep in mind that many tech companies and household-name brands are also part of these initiatives.

How did we get here?

By 2016, digital ad spending in the UK exceeded spending on more traditional methods of advertising. Television networks, by contrast, are tightly controlled by regulators on what content they can show and at what times, which makes them ideal for advertisers. User-generated content on digital platforms like Facebook, TikTok, YouTube and Twitter, on the other hand, carries an inherent risk. What if it could be regulated more like television?

After the infamous Adpocalypse in 2017, another incident in 2019 shook the advertising market: the mass shootings at mosques in Christchurch, New Zealand. Brands' ads were running on Facebook during the live-stream of the terrorist's heinous attack, and brands also appeared alongside the footage across the internet as users and news agencies shared videos of the recording.

The WFA made public statements urging platforms to do better, and within two weeks of the shootings it had elected a new president, Raja Rajamannar. Having received the WFA Global Marketer of the Year award in 2018, he took on the role alongside serving as Mastercard's CMO, a position he had already held for an impressive six years.

Member brands understandably didn't want to be associated with any harmful, hateful or extremist content. Controversies meant missed opportunities to cement themselves in people's lives, and boycotts could directly affect their bottom line. After a string of these controversies, clearly something had to change. Following Raja Rajamannar's election as WFA president, GARM was established. Masterminded by Rob Rakowitz, the initiative formed a partnership with the World Economic Forum.

The Global Alliance for Responsible Media (GARM) has partnered with the World Economic Forum to improve the safety of digital environments, addressing harmful and misleading media while protecting consumers and brands.

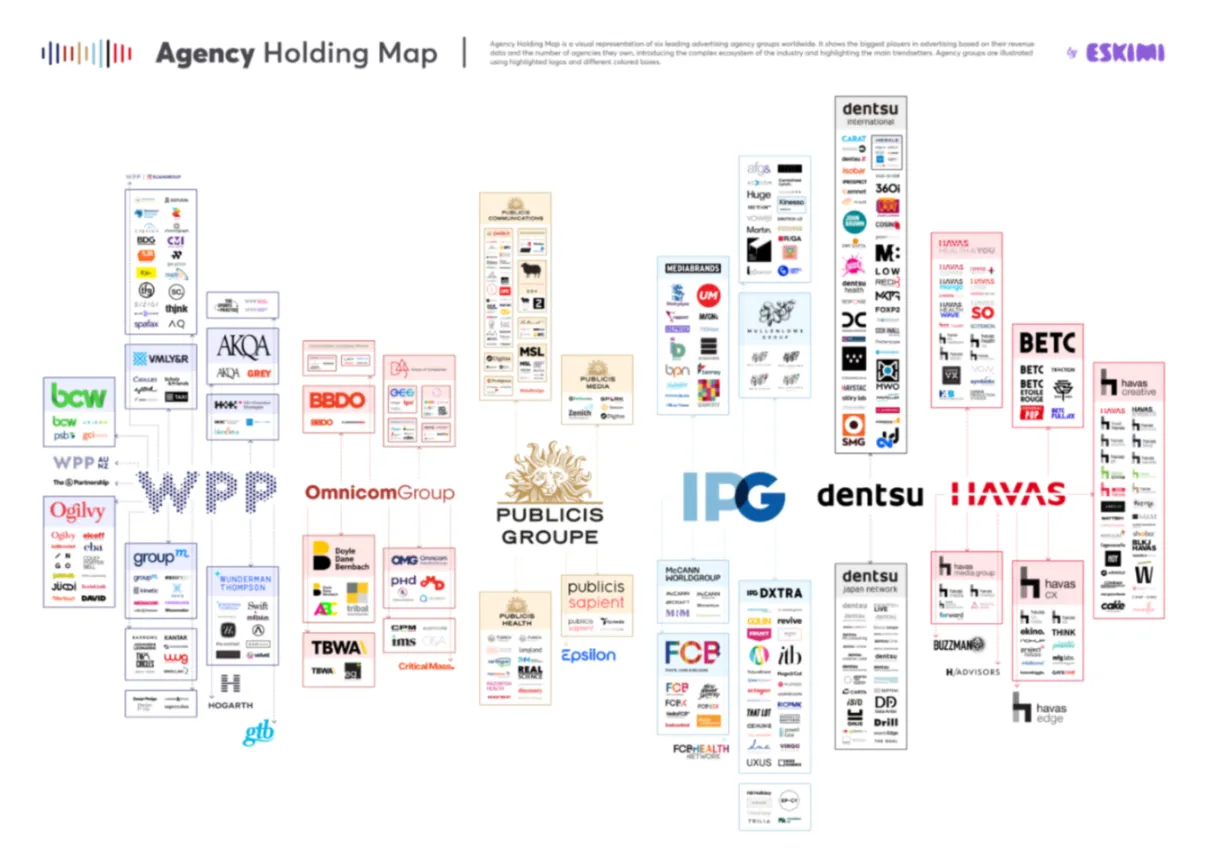

When you talk about GARM, it's sometimes hard to put into perspective how big and influential it was. The largest ad agency holding companies (referred to as the "Big Six") were all members of GARM and a lot of the initiative's policies are still influencing companies today.

For the next five to six years, there was continuous lobbying, along with consultations, white papers and panels pushing the idea that digital safety is of utmost importance. Near the end of this document, under the heading "Association Efforts", you can find sources for the different lobbying efforts.

Although it is not unusual for advertisers to want to be involved in legislation that could affect them, the global reach of the WFA's GARM and other supporting factors raise questions. With the information we have, what do we still not know about GARM? And now that it has shut down, does the WFA still exert the same influence and control?

One of the most notable events was Elon Musk's purchase of Twitter (now X). Musk vowed to turn the platform into a free-speech "town square", which met with the ire and scorn of advertisers. What followed was Elon telling advertisers to "Go Fuck Yourself", a defamation suit against Media Matters, and an antitrust suit against the WFA and its non-profit GARM initiative, leading to GARM's eventual closure and an FTC investigation into Media Matters' exchanges with ad groups.

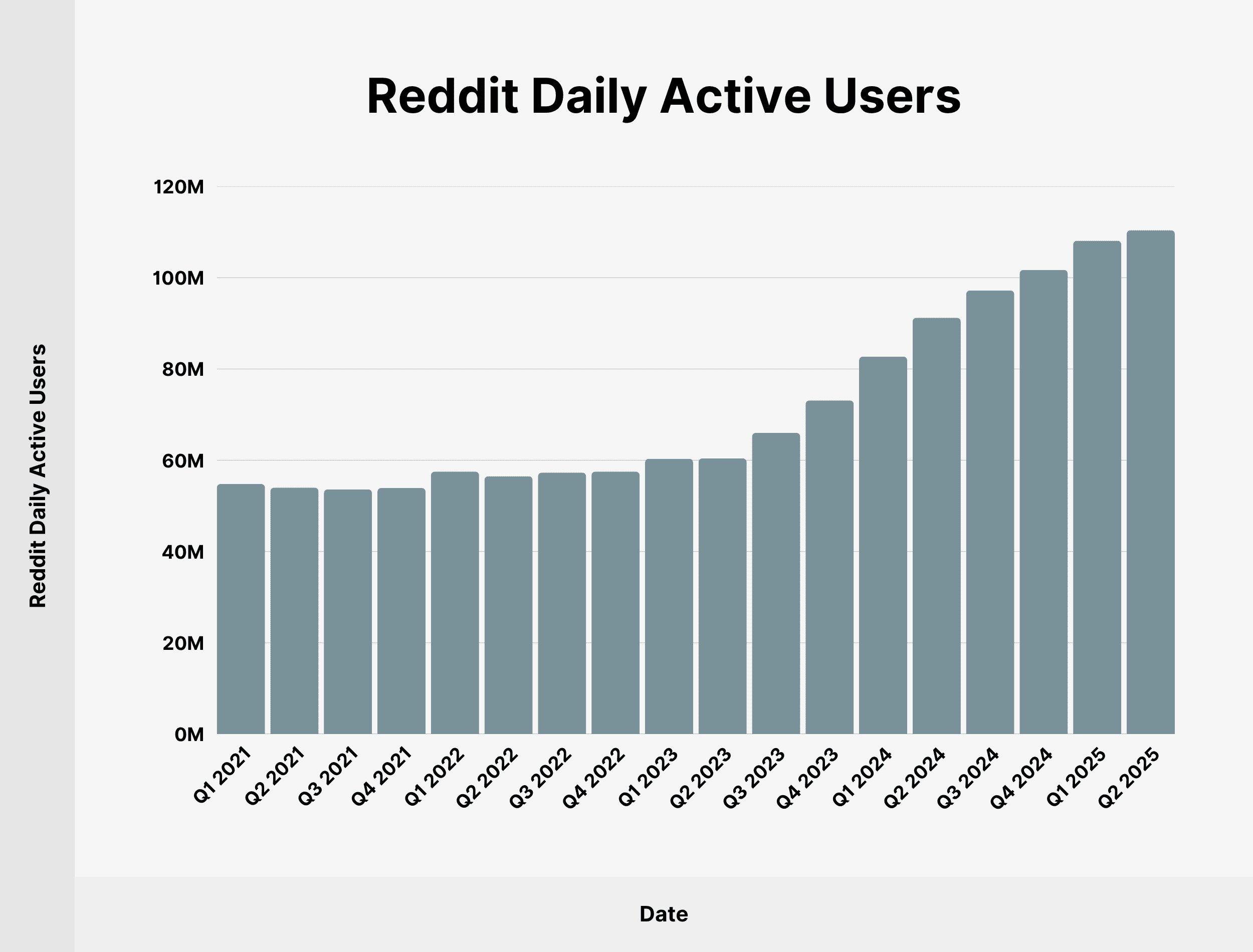

Competitors such as Threads and Bluesky have not sufficiently filled the gap left by the exodus of left-wing users from Twitter after the takeover and the 2024 election; many of those users have likely found a home on the left-leaning platform Reddit. Since Reddit's IPO it has become an increasingly attractive option for brands, and it is rolling out tons of new ad options, tools and analytics to entice them.

Legislation and Technology at work

I will now go over where the interests of companies and government align, how they could both benefit from these measures, and my own opinion.

VPNs as an escape

The UK government has said there are no plans to ban VPNs; a ban would only provoke unnecessary resistance, and ultimately they don't need one. Following the AI boom, more social media platforms rate-limit or block VPN traffic to stop AI bots scraping their valuable user data.

All that is needed is industry buy-in. With more and more countries passing their own online safety laws, especially those with the largest market shares, platforms will have to adjust their terms of service to comply.

At the time of writing, there are calls for VPN services to institute age verification, as they are "a loophole that needs closing".

Age verification

Company Benefit: In the UK and other countries, the advertising codes contain strict rules to protect children (and young people) from potentially misleading, harmful, or offensive material. But without accounts and age verification, how do you know a user is a child or an adult? You don't want to advertise children’s products to adults and legally you also don't want to advertise adult products to children.

Why a brand may want to target children:

- Developing Consumer Habits: Children represent a significant demographic that can be influenced early in their lives. By targeting them, advertisers aim to establish brand awareness and loyalty from a young age, which can lead to lifelong consumer habits.

- Pester Power: Children often exert influence over family purchasing decisions. Advertisements that appeal to children can lead them to request specific products, effectively leveraging their influence to drive sales.

- Emotional Engagement: Children are generally more impressionable and emotionally driven than adults. Advertisers often use colourful, engaging visuals and relatable characters to capture their attention, making them more receptive to marketing messages.

- Growing Market: The market for children’s products, including toys, clothing, and entertainment, is substantial. Targeting children allows companies to tap into this lucrative market, which can include both direct sales and parent-driven purchases.

- Digital Engagement: With the rise of digital media, children are spending more time online. Advertisers are increasingly utilising platforms like social media, video games, and apps that are popular among children to reach them directly.

- Regulatory Environment: In some regions, there are fewer restrictions on advertising to children compared to advertising to adults. This makes it a more accessible target demographic for marketers.

Overall, the combination of emotional influence, market potential, and the ability to shape future consumer behaviour makes children a lucrative target for advertising campaigns.

Age verification to limit adult content is meaningless while payment networks and brands see fit to become the moral arbiters and remove it for adults.

Government Benefit: People are understandably worried about how their biometric data and IDs could be used to track them across the internet. Anonymity is already hard enough to achieve; use a VPN and try signing up for a Google account or any social media without handing over your phone number or ID.

Verification companies such as Yoti state that facial age estimation selfies are deleted immediately after the check, and that identity documents used in some manual-review fallbacks are deleted after up to 28 days. However, these periods may be longer for legal reasons. I imagine this clause exists as an anti-fraud measure, but it could easily be expanded to cover individuals flagged as persons of interest, perhaps after posting something controversial on social media. Hypothetically, you could then be tracked across the internet, as the identity verification company holds both your ID and the site the request came from. This information could then be used to issue warrants to services such as social media platforms.

Age verification is merely a stepping stone to Digital ID, which is even listed on Ofcom's website as a potential method of age verification.

Digital ID - Papers, please

BritCard is the likely next step in government tracking. It comes straight from the Labour-aligned think tank Labour Together and is advocated for by the Tony Blair Institute. It would not only track people's right to work in the country but also give the government greater oversight of its citizens.

Leveraging digital identity, for instance, would make it harder for traffickers to exploit the UK’s labour market and operate anonymously online, says the non-profit organisation founded by the former British Prime Minister Tony Blair.

The Institute has become known for promoting digital IDs as a solution for unchecked migration, boosting the economy, improving public services and saving money. Earlier this year it also published an analysis on how identity and data exchange can help fight serious and organised crime (SOC), including human trafficking.

Government Benefit: A big part of Digital ID is immigration and reducing fraud. Think AI-powered services scanning your digital identity as you pass through borders, make financial investments, or even go about your day under facial recognition.

One of the key benefits touted to users is having all your digital data in one easy-to-access location, giving you more control over who has access to it. And for the government? All your data in one easy-to-access location, and now they don't even need to record a justification for accessing it.

The Data (Use and Access) Act 2025 has recently received Royal Assent. If digital identity is being used for immigration and right-to-work checks, I doubt it will be opt-in for immigrants, but it is being listed as opt-in for citizens... until it isn't. One listed use case is landlords checking digital IDs; how long until legislation requires one to access the internet? It's easy to see how digital IDs could be abused by current and future governments without safeguards, and to combat this, the government has been working on the UK digital identity and attributes trust framework (0.4).

The Tony Blair Institute states that adopting Digital ID could result in net savings of £10 billion per year. This rests on the premise that digital information could be paired with AI to reduce the public-sector workforce.

Company Benefit: Insurance companies could offer exclusive deals on the condition that you give them full access to your medical records via your digital identity. This type of data harvesting could extend to other types of insurance, such as home or car insurance.

For years, digital advertisers have relied on cookies and similar trackers to identify and profile users. These have been used to track a user's visits across multiple websites, building a profile of interests for targeted ads. Since the EU's "cookie law" came into effect, websites have been required to display cookie consent banners to get permission for marketing and analytics cookies. GDPR also treats many cookie identifiers as personal data, so processing the data they collect needs a lawful basis (in practice, usually consent for tracking), which imposes strict compliance requirements. Browsers and regulators have been curbing third-party cookies, resulting in a steady decline.

If a website or platform used a government-backed digital identity instead of tracking cookies, the dynamics would change significantly. Rather than assigning a cookie to a user, the site can prompt the user to log in or verify via their digital ID. The site then learns who they are, or verified traits about them, with a high degree of certainty. For example, a user logged in with their national digital ID confirms "this is John Doe, aged 30, resident of X country." The site could then personalise the user's experience, content or advertisements without needing to plant a tracking cookie on their machine.
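To make the contrast with cookies concrete, here is a minimal Python sketch, assuming a hypothetical digital ID provider that signs the attributes a site asks for. Everything in it (`PROVIDER_KEY`, `issue_assertion`, `verify_assertion`, the claim names) is made up for illustration; real schemes such as OpenID Connect or the EUDI Wallet use public-key signatures rather than the shared HMAC key used here to stay within the standard library. It shows the point above: with a single global identifier, two unrelated sites receive the same `sub` value and can join their records without any cookie, whereas per-site (pairwise) pseudonyms make that join harder.

```python
import base64
import hashlib
import hmac
import json

# Hypothetical provider secret. A real scheme would sign with a private key and
# let sites verify with a public key; a shared HMAC key keeps this stdlib-only.
PROVIDER_KEY = b"demo-provider-secret"


def _sign(body: bytes) -> str:
    return hmac.new(PROVIDER_KEY, body, hashlib.sha256).hexdigest()


def issue_assertion(user: dict, site: str, pairwise: bool) -> str:
    """Provider side: sign the verified attributes released to `site`."""
    if pairwise:
        # Per-site pseudonym: the same person gets a different ID at each site.
        subject = hmac.new(PROVIDER_KEY, f"{user['national_id']}|{site}".encode(),
                           hashlib.sha256).hexdigest()[:16]
    else:
        # Global identifier: the same ID everywhere the user logs in.
        subject = user["national_id"]
    claims = {"sub": subject, "name": user["name"], "age": user["age"], "aud": site}
    body = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode())
    return body.decode() + "." + _sign(body)


def verify_assertion(token: str, site: str) -> dict:
    """Site side: check the signature and intended audience, then read the claims."""
    body, sig = token.rsplit(".", 1)
    if not hmac.compare_digest(sig, _sign(body.encode())):
        raise ValueError("signature check failed")
    claims = json.loads(base64.urlsafe_b64decode(body))
    if claims["aud"] != site:
        raise ValueError("assertion was issued for a different site")
    return claims


john = {"national_id": "GB-0001", "name": "John Doe", "age": 30}
shop = verify_assertion(issue_assertion(john, "shop.example", False), "shop.example")
news = verify_assertion(issue_assertion(john, "news.example", False), "news.example")
print(shop["sub"] == news["sub"])  # True: both sites can link John's visits
```

Whether a real national scheme hands sites a global identifier or a pairwise one is a design choice, and it largely decides how easily advertisers could link your activity across sites.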

This raises the question: could advertisers bypass "cookie consent" by using digital identity systems? Yes, if the user willingly uses an ID system to authenticate. However, no cookies does not mean no consent or regulation, as data from digital IDs is treated under GDPR the same as any other personal data. Ultimately, though, a digital ID system offers advertisers better data quality and attribution, precise and verified ad targeting, and fraud reduction.

Companies could incentivise users to log in with their digital IDs through giveaways, promotions and other incentives, much as they do now to get people to sign up to email mailing lists. And if digital IDs are as quick and easy to use as passkeys are now, they will likely face little resistance.

AI and End-to-end Encryption

In the modern world, companies and consumers have become keenly aware of the importance of cyber security. Multiple security breaches have plagued the industry, so how do they restore confidence? Social media platforms are of particular interest, as leaked private conversations could cause financial or emotional distress.

This is where end-to-end encryption (E2EE) comes in: information exchanged between two parties is encrypted so that no one except the participants can easily decrypt it. Not your ISP, the social media platform or law enforcement. Supposedly.
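For the curious, the core idea can be sketched with a toy Diffie-Hellman key exchange in Python. This is an illustration only, assuming deliberately tiny parameters and a homemade SHA-256 keystream; it is not secure and is not how any particular messenger works (real apps use vetted protocols such as the Signal Protocol with X25519 and authenticated encryption). It shows why the platform in the middle only ever handles public values and ciphertext.

```python
import hashlib
import secrets

# Toy public parameters shared by everyone. NOT secure: real systems use
# vetted curves such as X25519 or much larger groups.
P = 4294967291  # the largest prime below 2**32
G = 5


def keypair():
    """Return (private, public); only the public half is ever sent."""
    private = secrets.randbelow(P - 2) + 1
    return private, pow(G, private, P)


def shared_key(my_private: int, their_public: int) -> bytes:
    """Both sides arrive at the same secret without ever transmitting it."""
    secret = pow(their_public, my_private, P)
    return hashlib.sha256(secret.to_bytes(4, "big")).digest()


def keystream_xor(key: bytes, data: bytes) -> bytes:
    """Toy stream cipher: XOR with SHA-256(key || counter); encrypts and decrypts.
    No authentication and no per-message nonce, so never use this for real."""
    out = bytearray()
    for counter, start in enumerate(range(0, len(data), 32)):
        pad = hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        out.extend(b ^ k for b, k in zip(data[start:start + 32], pad))
    return bytes(out)


alice_private, alice_public = keypair()
bob_private, bob_public = keypair()

# The platform relays only alice_public, bob_public and the ciphertext.
ciphertext = keystream_xor(shared_key(alice_private, bob_public), b"see you at six")
plaintext = keystream_xor(shared_key(bob_private, alice_public), ciphertext)
print(plaintext)  # b'see you at six'
```

Client-side scanning targets the moment before `keystream_xor` is called, while the plaintext still sits on the device, which is why it can inspect messages without breaking the encryption itself.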

A lot of time and money has gone into improving encryption for both the storage and transfer of data, so much so that it has complicated investigations. It is always easier to request information about an individual from companies on the basis of a suspected crime than to apply for a warrant to access the user's devices.

The Online Safety Act requires online platforms to protect users, including children, from harmful content. This has led to concerns that tech companies might be compelled to weaken end-to-end encryption to scan for illegal content, potentially impacting user privacy and security.

Government Benefit: The OSA and the EU's Chat Control both raise concerns about AI-driven client-side scanning, in which both the text and media of a message are scanned on your device while it is being composed, before it is encrypted. Digital rights groups warn that proposed EU CSAM detection orders risk effectively requiring client-side scanning, including on E2EE services.

The intended purpose is to scan messages for CSAM on digital platforms; however, with future legislative creep or "bad actors", it could create surveillance states. Automated scanners routinely misidentify innocent content, such as vacation photos or private jokes, as illegal, putting ordinary people at risk of false accusations and wasting investigators' time, while also introducing vulnerabilities for criminals to exploit.
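To see how an innocent image can be flagged, here is a minimal Python sketch of a simple perceptual hash (an "average hash") compared against a blocklist by Hamming distance. It is a simplified stand-in, not what any real scanner runs: deployed systems use proprietary hashes such as PhotoDNA or AI classifiers, the images here are tiny hand-made 8x8 grayscale grids, and `half_split` and the threshold of 5 bits are hypothetical choices. Because the hash keeps only whether each pixel is brighter than the image's average, a dark/bright "known bad" image and an almost uniformly grey photo produce exactly the same hash.

```python
from typing import List

# A grayscale image already shrunk to a small grid (values 0-255).
Grid = List[List[int]]


def average_hash(grid: Grid) -> int:
    """Average hash: one bit per pixel, set if the pixel is brighter than the mean."""
    pixels = [p for row in grid for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def is_flagged(image: Grid, blocklist: List[int], threshold: int = 5) -> bool:
    """Flag the image if its hash is within `threshold` bits of any blocklisted hash."""
    h = average_hash(image)
    return any(hamming(h, bad) <= threshold for bad in blocklist)


def half_split(left: int, right: int, size: int = 8) -> Grid:
    """Hypothetical test image: left half one shade, right half another."""
    half = size // 2
    return [[left] * half + [right] * (size - half) for _ in range(size)]


known_bad = half_split(10, 200)               # stands in for a blocklisted image
innocent = half_split(90, 110)                # a nearly uniform grey photo
unrelated = [[10] * 8] * 4 + [[200] * 8] * 4  # dark top, bright bottom

blocklist = [average_hash(known_bad)]
print(is_flagged(innocent, blocklist))   # True: a false positive
print(is_flagged(unrelated, blocklist))  # False: 32 of 64 bits differ
```

Real hashes are far more robust than this one, but the trade-off is the same: a matching threshold loose enough to catch edited copies of a known image will also, occasionally, catch images that merely share its overall structure.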

Company Benefit: In the UK, where the OSA places the duty of care on social media platforms, it is unlikely that any system put in place would be built by the government. Companies will be vying to push their AI tools as a 'drop-in' solution. Larger corporations like Meta would likely build their own closed-source solution, giving them the ability to also scan the content of messages to personalise the ads seen on their platforms. We've seen this before: when Google launched Gmail in 2004, emails were scanned for exactly that purpose. Google only clarified the practice in 2014, and later announced in 2017 that it would stop.

Footnotes & References

When I first started researching where the global push for "Online Safety" originated, I began by scrutinising Ofcom and its GOSRN forum, and found links to the World Economic Forum. The more I looked into this, the more I felt like I was going crazy, especially when I found the links to the World Federation of Advertisers. Whenever you start believing theories of large, powerful organisations pulling all the strings behind the scenes, it's easy to feel you'll be dismissed as a 'conspiracy theorist'... That was until I found the report from the US House Judiciary Committee. How do more people not know about this?

There is also evidence to suggest that, following the death of George Floyd in 2020, the WFA may have contributed heavily to the push for DEI-related programs, which, as we know, ultimately failed, as shown by their now-deleted section.

Additionally, there has been a lot of focus and criticism (rightly so) of Collective Shout following the delisting of games on Steam and Itch.io. However, to me, rather than masterminds of a grand plot, they seem more like opportunistic small fry taking advantage of, and being enabled by, a globalised agenda.

The payment networks (all part of WFA-affiliated associations) pushing platforms to remove adult content are running ahead of slow-moving global legislation. And the content they are specifically targeting (for now) seems to be anything that could fall under the OSA's 'legal but harmful' category. One has to wonder if this position is motivated by the idea "It may be legal for adults, but we find it perverse and don't want our adverts anywhere near it". Not satisfied with content and page exclusions alone, they don't want to be associated with platforms hosting this type of content at all, given previous backlash from agenda-driven advocacy groups.

Association Efforts

WFA (Global)

- Created Brand Safety Floor and Suitability Framework for community member organisations and platforms to adhere to.

- GARM and WFA listed among stakeholders for the Digital Services Act

- Active stakeholder throughout the EU's Digital Services Act process. Submitted a detailed position paper in response to the European Commission's DSA public consultation, circulated to members and EU officials. WFA generally supported the DSA’s goals of making online platforms more accountable, especially in curbing illegal or harmful content that could damage brands.

- Listed among the more than 100 stakeholders providing input to the LIBE committee on the DSA.

- Ongoing engagement: 1, 2 & 3

- Cemented its role as a de facto partner in the DSA's implementation on advertising matters.

- Joined industry call to ensure legal certainty around data flow in and outside of the EU.

- GARM takes credit for Twitter's revenue decline: "you may recognize my name from being the idiot who challenged Musk on brand safety issues. Since then they are 80% below revenue forecasts"

AAI (Ireland)

- Consulted on the Online Safety Code Draft

UDM (France)

- Private meetings with relevant authorities: 1, 2 & 3.

- Public posts and collaboration with partners.

- Director General Jean-Luc Chetrit emphasized advertisers’ commitment to brand safety, calling for accountability and transparency from big platforms in line with new laws like SREN and the EU Digital Services Act.

- Stakeholder listed in contributors to bill.

AANA (Australia)

- Announced GARM and WFA partnership.

- Lobbying for industry self-regulation and brand safety; launched a "Code of Ethics" for advertising in Australia.

- Consulted by the federal government during the Act's statutory review process: "It is vital for both online safety and brand safety for advertisers to have control over where their advertising appears and access to behavioural data is the best and most accurate method for ensuring this is done in a responsible manner when advertising online."

- Founding member of Unstereotype Alliance.

ISBA (UK)

- Response to the OSA draft. They do point out that "While online safety must be a priority, the potential impact on free speech is obviously a concern", but the response shows heavy GARM influence.

- Calling on Ministers following the Queen's Speech.

- Advocating with reaction posts: 1 & 2.

- Lobbying Government and regulators outside of formal consultations.

- Met with MPs, ministers and regulators to press advertisers' concerns, including by participating in government-led forums such as the Online Advertising Programme (OAP) workshops and taskforces.

- Evidence submitted for Social media, misinformation and harmful algorithms.

- Priorities for 2022 "influencing policy by demonstrating advertisers’ proactive commitment to creating positive outcomes for society and the economy."

- Position

ANA (US) - More free speech and privacy focused

- Voiced support for children's privacy but argued KOSA was overly broad in scope. ANA’s stance was that a single national law would provide clearer standards and avoid the unintended consequences of narrowly targeted bills.

- ANA registered to lobby on KOSA in 2022-23, indicating multiple contacts with lawmakers or staff about the bill.

- Strongly opposed any provisions that would unduly restrict online advertising, especially advertising targeted at minors, arguing they would force "families to pay huge amounts of money for popular ad-supported digital services".

- Engaged the media and its membership on the issue; its 2024 legal conference included panels focused on KOSA and COPPA 2.0.

Timeline

Key: Informational, Corrective action or positive for Advertisers, Negative brand or financial for Advertisers

2017

- 09 Feb - The Times Investigation: Big brands fund terror through online adverts

Some of the world’s biggest brands are unwittingly funding Islamic extremists, white supremacists and pornographers by advertising on their websites, The Times can reveal.

- 29 Mar - The Adpocalypse: Google under fire as brands pull advertising and ad industry demands action

Google is facing a hit from other brands. Channel 4 has “pulled all advertising from YouTube with immediate effect”. Dan Brooke, its chief communications and marketing officer, explains: “We are extremely concerned about Channel 4 advertising being placed alongside highly offensive material on YouTube. It is a direct contravention of assurances our media buying agency had received on our behalf from YouTube.”

- 27 Apr - UK enacts the Digital Economy Act 2017, despite concerns about Part 3 (age verification, later scheduled to come into effect on 15 July 2019), under which the age-verification regulator "may give notice of that fact to any payment-services provider or ancillary service provider."

2019

- 15 Mar - Mass shootings take place at mosques in Christchurch

- 26 Mar - Raja Rajamannar, Mastercard's CMO, is named President of the World Federation of Advertisers.

- 28 Mar - World Federation of Advertisers (WFA) is urging brands to put pressure on all social media platforms to do better.

- 08 Apr - UK government publishes the Online Harms White Paper

- 20 Apr - WFA President Raja: "it is not a brand safety issue. It’s a societal safety issue, and as marketers we have a responsibility to society.”

- 18 Jun - The World Federation of Advertisers (WFA) launches the Global Alliance for Responsible Media and partners with the World Economic Forum to "improve the safety of digital environments". and Mastercard are part of this alliance.

- 20 Jun - UK Government announces that implementation of age verification under Part 3 of the Digital Economy Act would be delayed by about six months, because the European Commission hadn’t been notified about the UK’s guidance on AV arrangements.

- 18 Oct - Collective Shout submits discussion paper to AANA Code of Ethics Review Group

- 21 Oct - Having delayed Part 3 of the Digital Economy Act in June, the UK announces it will not be commenced and that its objectives "would instead be delivered through the Government’s proposals for tackling online harms."

- 11 Dec - Australia begins drafting its Online Safety Act.

2020

- 24 Jun - Mastercard CMO: Put Brand Safety First

The problem hasn’t gone unaddressed. Online platforms have introduced new measures and, as marketers, we’ve seen some incremental improvements like content selection tools and brand safety report cards. However, we have a lot more ground to cover.

- 01 Jul - Due to the Stop Hate for Profit campaign a third of advertisers may boycott Facebook in hate speech revolt

Stephan Loerke, chief executive of the World Federation of Advertisers, told the Financial Times the advertising industry was starting to request big changes from social media platforms. “In all candour,” he said, “it feels like a turning point.”

- 23 Sep - Facebook, YouTube and Twitter have agreed a deal with major advertisers (WFA) on how they define harmful content.

Garm will decide the definitions for harmful content, setting what it calls "a common baseline", rather than the current situation where they "vary by platform". That makes it difficult for brands to choose where to place their adverts, it said.

- 10 Dec - Mastercard and Visa said they will no longer allow their cards to be used on Pornhub (MindGeek).

- 15 Dec - The EU's Digital Services Act (DSA) is formally proposed by the European Commission.

2021

- 24 Feb - Australian Online Safety Bill 2021 is introduced to Parliament.

- 14 Apr - Mastercard announces enhancements to its adult content rules.

- 12 May - UK publishes draft of the Online Safety Bill.

- 29 Jun - WEF publishes the Advancing Digital Safety white paper and launches the Global Coalition to Improve Digital Safety, Ofcom being a 'founding' member.

- 23 Jul - Australia's Online Safety Act 2021 receives Royal Assent.

- 25 Aug - Mastercard joins IWF in fight to keep internet safe.

- 06 Oct - Ofcom publishes their Video-Sharing platform guidance

ISBA also noted that up until recently, the regulatory environment covering online content has lacked robust data and that they fully supported progress toward new regulation enforced by Ofcom.

2022

- 23 Jan - Australia's Online Safety Act 2021 commences

- 23 May - WEF's annual meeting "Ushering in a Safer Digital Future".

- 04 Aug - Mastercard, Visa suspend ties with ad arm of Pornhub owner MindGeek (TrafficJunky).

- 27 Oct - Elon Musk finalises his purchase of Twitter and announces it will act as a digital town square.

- 14 Nov - UK Ofcom establishes the Global Online Safety Regulators Network with Australia, Fiji and Ireland.

- 16 Nov - EU's Digital Services Act comes into effect

2023

- 01 May - Visa replaces Global Brand Protection Program (GBPP) with Visa Integrity Risk Program (VIRP)

- 24 Jul - Elon Musk rebrands Twitter as X

- 17 Nov - Prominent brands halt their advertising on X

The dizzying pace and scope of the withdrawals from X, the platform formerly known as Twitter, comes amid a widening backlash against Musk for his increasingly vocal endorsement of extremist beliefs.

- 30 Nov - Elon Musk tells advertisers "Go fuck yourself".

“I don’t want them to advertise,” he said at the New York Times DealBook Summit in New York. “If someone is going to blackmail me with advertising or money go f**k yourself. Go. F**k. Yourself,” he said. “Is that clear? Hey Bob, if you’re in the audience, that’s how I feel” he added, referring to Disney CEO Bob Iger, who spoke earlier at the summit on Wednesday.

2024

- 21 May - France passes its SREN law.

- 07 Aug - Meta and Google secretly targeted minors on YouTube with Instagram ads.

The publication reports that Google directed ads to a subset of users labeled as “unknown” in its advertising systems, in an attempt to disguise the group skewed toward teenagers. According to a Google Ads help page, the “unknown” demographic category refers to people whose age, gender, parental status, or household income are supposedly unidentified, and can allow advertisers to reach “a significantly wider audience” when selected.

- 09 Aug - WFA's GARM shuts down after X lawsuit.

The suit alleged that the WFA engaged in anticompetitive behavior and organized an advertising boycott that ultimately damaged X’s financial health.

- 01 Dec - Australia's Digital ID commences.

2025

- 18 Mar - David Wheldon of Allwyn (lotteries operator) succeeds Raja Rajamannar as WFA President.

- 12 Apr - Visa updates its rules.

- 20 May - "FTC probes Media Matters’ exchanges with ad groups, stoking fears of retribution”

The letter, which CNN has viewed, directs Media Matters to turn over all documents, materials and communications with a range of ad entities and related organizations — including the World Federation of Advertisers and the Global Alliance for Responsible Media — regarding brand safety and disinformation, the source said.

- 27 Jun - House Judiciary Committee releases a report on "GARM's Advertising Cartel".

In recent years, foreign governments have adopted legislation and created regulatory regimes in an effort to target and restrict various forms of online speech. Foreign regulators have even attempted to use their authority to restrict the content that American citizens can view online while in the United States. In particular, the European Commission (EC) and Australia’s eSafety Commissioner have taken steps to limit the types of content that Americans are able to access on social media platforms.

- 14 Jul - Commission publishes an age-verification blueprint designed to interoperate with EUDI Wallets; all Member States must offer a Wallet by 2026, but platforms aren’t required to use EUDI Wallet for age checks.

- 25 Jul - Ofcom begins enforcing the Online Safety Act's children's safety duties, including "highly effective" age-verification requirements for services that allow pornography.

- 29 Jul - Steam and Itch.io delist games, citing stricter rules mandated by payment processors.

- 01 Aug - Mastercard deflects blame for NSFW games being taken down.

Mastercard: "Mastercard has not evaluated any game or required restrictions of any activity on game creator sites and platforms, contrary to media reports and allegations," the company says.

Valve: "Mastercard did not communicate with Valve directly, despite our request to do so," a Valve representative said. "Mastercard communicated with payment processors and their acquiring banks. Payment processors communicated this with Valve, and we replied by outlining Steam’s policy since 2018 of attempting to distribute games that are legal for distribution. "Payment processors rejected this, and specifically cited Mastercard’s Rule 5.12.7 and risk to the Mastercard brand."

- 06 Aug - Media Matters Presses Bid To Halt FTC Probe

- 18 Aug - Mastercard shifts $180M Global Media to WPP Media

Future

- Dec 2025 - Australia's social media ban for children under 16 expected to come into effect.